Understanding Real Estate Investment for Quants

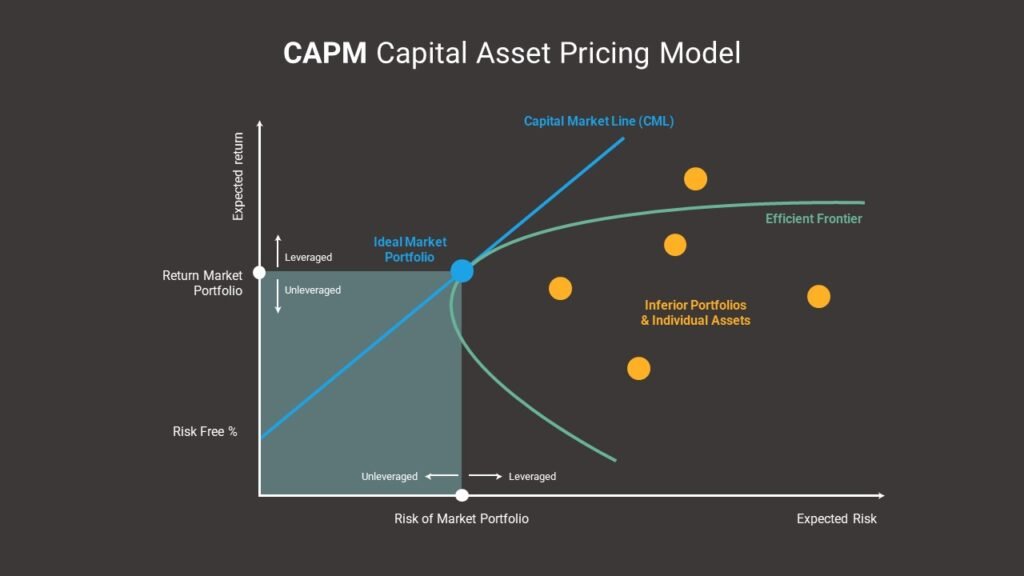

Are you looking to build your wealth and secure your financial future? Real estate investment could be the key to unlocking your financial success. In this comprehensive guide, we will delve into the world of real estate investment, explore its core principles and concepts, and provide you with valuable insights for crafting effective investment strategies.

Understanding Real Estate

Real estate is a tangible asset class consisting of land, the structures built on it, and the natural resources attached to it. It plays a pivotal role in the global economy and is a cornerstone of wealth accumulation for many individuals and organizations. To navigate this intricate landscape, it’s crucial to grasp a few key concepts and principles.

Core Principles and Concepts

Building Investment Strategies

Creating successful real estate investment strategies involves careful planning and consideration of your financial goals. Here’s a step-by-step approach:

1. Define Your Objectives: Clearly outline your investment goals, whether income generation, long-term wealth, or a mix of both.
2. Budget and Financing: Determine your budget and explore financing options, including mortgage rates and terms.
3. Property Selection: Choose properties that align with your goals, budget, and risk tolerance. Analyze potential cash flow and appreciation.
4. Diversification: Spread your investments across different property types (e.g., residential, commercial) and geographic locations.
5. Risk Assessment: Evaluate and mitigate potential risks, including market fluctuations and unforeseen expenses.
6. Property Management: Decide whether to manage properties yourself or hire professionals.
7. Exit Strategy: Plan how and when you will exit each investment (for example, by selling or refinancing) so as to maximize returns.

Concepts Related to Real Estate Investment

Real estate investment offers a multitude of strategies, each tailored to different financial goals and risk tolerances.
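Since this guide is aimed at quants, it helps to make steps 2, 3, and 5 of the approach above concrete. The Python sketch below computes a fixed-rate mortgage payment, net operating income (NOI), cap rate, cash-on-cash return, and a simple vacancy stress test. The `RentalDeal` class, its field names, and every input figure are hypothetical, chosen only for illustration; closing costs, income taxes, and appreciation are deliberately ignored.

```python
from dataclasses import dataclass, replace


def monthly_mortgage_payment(principal: float, annual_rate: float, years: int) -> float:
    """Monthly payment on a fully amortizing fixed-rate loan (standard annuity formula)."""
    n = years * 12          # number of monthly payments
    r = annual_rate / 12    # monthly interest rate
    if r == 0:
        return principal / n
    return principal * r / (1 - (1 + r) ** -n)


@dataclass(frozen=True)
class RentalDeal:
    """Hypothetical single-property deal; closing costs and appreciation are ignored."""
    price: float         # purchase price
    down_payment: float  # cash invested up front
    annual_rate: float   # mortgage rate, e.g. 0.065 for 6.5%
    loan_years: int      # amortization term in years
    monthly_rent: float  # gross scheduled rent
    vacancy_rate: float  # share of rent lost to vacancy, e.g. 0.05
    annual_opex: float   # property taxes, insurance, maintenance, management

    def noi(self) -> float:
        """Net operating income: rent collected minus operating expenses, before debt service."""
        effective_rent = 12 * self.monthly_rent * (1 - self.vacancy_rate)
        return effective_rent - self.annual_opex

    def annual_debt_service(self) -> float:
        """Twelve months of mortgage payments on the financed portion of the price."""
        loan = self.price - self.down_payment
        return 12 * monthly_mortgage_payment(loan, self.annual_rate, self.loan_years)

    def cash_flow(self) -> float:
        """Annual pre-tax cash flow after mortgage payments."""
        return self.noi() - self.annual_debt_service()

    def cap_rate(self) -> float:
        """NOI relative to purchase price (ignores financing)."""
        return self.noi() / self.price

    def cash_on_cash(self) -> float:
        """Annual pre-tax cash flow relative to the cash invested."""
        return self.cash_flow() / self.down_payment


# Hypothetical example deal: 20% down, 30-year loan at 6.5%.
deal = RentalDeal(price=300_000, down_payment=60_000, annual_rate=0.065,
                  loan_years=30, monthly_rent=2_400, vacancy_rate=0.05,
                  annual_opex=9_000)

# Step 5 (risk assessment): stress the vacancy assumption and watch cash flow.
for vacancy in (0.05, 0.10, 0.15):
    stressed = replace(deal, vacancy_rate=vacancy)
    print(f"vacancy {vacancy:.0%}: NOI {stressed.noi():,.0f}, "
          f"cash flow {stressed.cash_flow():,.0f}, "
          f"cash-on-cash {stressed.cash_on_cash():.2%}")
```

With these made-up inputs the deal is roughly break-even at 5% vacancy and turns cash-flow negative as vacancy rises, which is exactly the kind of sensitivity step 5 asks you to check. Keeping the assumptions in one immutable object makes it easy to vary a single input at a time with `dataclasses.replace`.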
To make these strategies more accessible, they can be grouped into five categories: business strategies, starter strategies, wealth-building strategies, debt strategies, and passive strategies. Let’s look at each category and the 15 strategies it covers.

Business Strategies

Starter Strategies

Wealth Building Strategies

Debt Strategies

Passive Strategies

Remember that these strategies are not mutually exclusive, and successful investors often combine them at different stages of their real estate journey.

Conclusion

Real estate investment is a dynamic and rewarding endeavor. By understanding the fundamental principles, concepts, and strategies, you can navigate this landscape with confidence. Success in real estate investment requires a blend of knowledge, calculated risk-taking, and a well-thought-out plan. Begin your journey towards financial prosperity today, and let real estate be your path to wealth accumulation.

Frequently Asked Questions (FAQs)

Q1: What is the best type of property to invest in?
A1: The best type of property depends on your goals. Residential properties are a common choice for steady rental income, while commercial properties can offer higher returns but involve more complexity.

Q2: How can I finance my real estate investment?
A2: Financing options include mortgages, loans, private money, and partnerships. The right choice depends on your financial situation and investment strategy.

Q3: Are there tax benefits to real estate investment?
A3: Yes. Real estate often offers tax advantages, such as deductions for mortgage interest, property taxes, and depreciation, although the specific rules vary by jurisdiction.
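To illustrate A3 numerically, the sketch below estimates one year of taxable rental income after deducting property taxes, other operating expenses, mortgage interest, and straight-line depreciation. It assumes the 27.5-year recovery period the US IRS uses for residential rental property; other countries and property types use different schedules. All function names and figures are hypothetical, and the model deliberately ignores first-year conventions, depreciation recapture on sale, and loss-limitation rules.

```python
def straight_line_depreciation(building_basis: float, recovery_years: float) -> float:
    """Annual straight-line depreciation. Land is not depreciable, so pass only the building's basis."""
    return building_basis / recovery_years


def first_year_interest(principal: float, annual_rate: float, years: int) -> float:
    """Total interest in the first 12 payments of a fixed-rate, fully amortizing loan (rate > 0)."""
    r = annual_rate / 12
    n = years * 12
    payment = principal * r / (1 - (1 + r) ** -n)
    balance = principal
    total_interest = 0.0
    for _ in range(12):
        interest = balance * r          # interest accrues on the outstanding balance
        total_interest += interest
        balance -= payment - interest   # the rest of the payment reduces principal
    return total_interest


def taxable_rental_income(rent_collected: float, property_tax: float,
                          other_expenses: float, mortgage_interest: float,
                          depreciation: float) -> float:
    """Rental income after the deductions mentioned in A3 (simplified, hypothetical model)."""
    return rent_collected - property_tax - other_expenses - mortgage_interest - depreciation


# Hypothetical inputs: $300,000 purchase, of which $60,000 is assumed to be land value,
# a $240,000 loan at 6.5% over 30 years, and a 27.5-year recovery period.
depreciation = straight_line_depreciation(300_000 - 60_000, 27.5)
interest = first_year_interest(240_000, 0.065, 30)
taxable = taxable_rental_income(rent_collected=27_360, property_tax=3_600,
                                other_expenses=5_400, mortgage_interest=interest,
                                depreciation=depreciation)
print(f"depreciation {depreciation:,.0f}, interest {interest:,.0f}, "
      f"taxable income {taxable:,.0f}")
```

Because depreciation is a non-cash deduction, a property can show a taxable loss on paper while still producing positive cash flow. Whether such a loss can offset other income depends on local rules (in the US, for example, the passive-activity loss limits).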
